Generative pre-trained transformers (GPT) are a type of large language model (LLM) and a prominent framework for generative artificial intelligence. They...

46 KB (4,092 words) - 16:40, 16 July 2024

ChatGPT (redirect from Chat Generative Pre-trained Transformer)

misinformation. ChatGPT is built on OpenAI's proprietary series of generative pre-trained transformer (GPT) models and is fine-tuned for conversational applications...

191 KB (16,504 words) - 02:47, 26 July 2024

GPT-3 (redirect from Generative Pre-trained Transformer 3)

Generative Pre-trained Transformer 3 (GPT-3) is a large language model released by OpenAI in 2020. Like its predecessor, GPT-2, it is a decoder-only transformer...

54 KB (4,914 words) - 19:17, 6 July 2024

GPT-4 (redirect from Generative Pre-trained Transformer 4)

Generative Pre-trained Transformer 4 (GPT-4) is a multimodal large language model created by OpenAI, and the fourth in its series of GPT foundation models...

61 KB (5,893 words) - 14:18, 26 July 2024

GPT-2 (redirect from Generative Pre-trained Transformer 2)

Generative Pre-trained Transformer 2 (GPT-2) is a large language model by OpenAI and the second in their foundational series of GPT models. GPT-2 was pre-trained...

44 KB (3,260 words) - 10:30, 25 July 2024

GPT-1 (category Generative pre-trained transformers)

Generative Pre-trained Transformer 1 (GPT-1) was the first of OpenAI's large language models following Google's invention of the transformer architecture...

32 KB (1,064 words) - 15:45, 8 May 2024

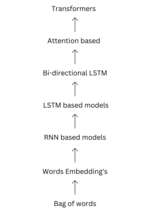

development of pre-trained systems, such as generative pre-trained transformers (GPTs) and BERT (Bidirectional Encoder Representations from Transformers). In 1990...

70 KB (8,736 words) - 18:33, 26 July 2024

advancements in generative models compared to older long short-term memory models, leading to the first generative pre-trained transformer (GPT), known as...

119 KB (10,459 words) - 01:11, 26 July 2024

GPT-4o (category Generative pre-trained transformers)

GPT-4o (GPT-4 Omni) is a multilingual, multimodal generative pre-trained transformer designed by OpenAI. It was announced by OpenAI's CTO Mira Murati during...

14 KB (1,536 words) - 19:37, 26 July 2024

Google, that introduced a new deep learning architecture known as the transformer based on attention mechanisms proposed by Bahdanau et al. in 2014. It...

5 KB (432 words) - 00:49, 18 July 2024

Generative pre-trained transformer, a type of artificial intelligence language model; ChatGPT, a chatbot developed by OpenAI, based on generative pre-trained...

1,006 bytes (133 words) - 02:30, 11 June 2024

DALL-E (redirect from Contrastive Language-Image Pre-training)

Initiative. The first generative pre-trained transformer (GPT) model was initially developed by OpenAI in 2018, using a Transformer architecture. The first...

66 KB (4,242 words) - 18:13, 25 July 2024

GPT-J (category Generative pre-trained transformers)

developed by EleutherAI in 2021. As the name suggests, it is a generative pre-trained transformer model designed to produce human-like text that continues from...

11 KB (981 words) - 02:56, 11 June 2024

Generative Pre-trained Transformer 4Chan (GPT-4chan) is a controversial AI model that was developed and deployed by YouTuber and AI researcher Yannic Kilcher...

9 KB (1,127 words) - 14:23, 25 June 2024

IBM Watsonx (category Generative pre-trained transformers)

Watsonx is IBM's commercial generative AI and scientific data platform based on cloud. It offers a studio, data store, and governance toolkit. It supports...

7 KB (598 words) - 07:29, 26 June 2024

OpenAI Codex, which is a modified, production version of the Generative Pre-trained Transformer 3 (GPT-3), a language model using deep learning to produce...

17 KB (1,673 words) - 13:12, 10 July 2024

long stretches of contiguous text. Generative Pre-trained Transformer 2 ("GPT-2") is an unsupervised transformer language model and the successor to...

185 KB (16,007 words) - 22:08, 27 July 2024

Artificial intelligence (section Generative AI)

meaning), transformers (a deep learning architecture using an attention mechanism), and others. In 2019, generative pre-trained transformer (or "GPT")...

234 KB (23,765 words) - 00:39, 27 July 2024

(2022-10-01). "GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers". arXiv:2210.17323 [cs.LG]. Dettmers, Tim; Svirschevski,...

135 KB (12,303 words) - 04:25, 26 July 2024

intelligence powered gaslighting of the entire world population." Generative pre-trained transformers (GPTs) are a class of large language models (LLMs) that employ...

30 KB (2,769 words) - 01:11, 28 July 2024

IBM Granite (category Generative artificial intelligence)

data and generative AI platform Watsonx along with other models, IBM opened the source code of some code models. Granite models are trained on datasets...

6 KB (474 words) - 07:15, 17 July 2024

can also analyze images. Claude models are generative pre-trained transformers. They have been pre-trained to predict the next word in large amounts of...

12 KB (1,185 words) - 15:04, 23 July 2024

BLOOM (language model) (category Generative pre-trained transformers)

176-billion-parameter transformer-based autoregressive large language model (LLM). The model, as well as the code base and the data used to train it, are distributed...

4 KB (496 words) - 20:56, 28 May 2024

OpenAI Codex (category Generative pre-trained transformers)

Research Access Program. Based on GPT-3, a neural network trained on text, Codex was additionally trained on 159 gigabytes of Python code from 54 million GitHub...

13 KB (1,306 words) - 01:56, 1 April 2024

example, generative pre-trained transformers (GPT), which use the transformer architecture, have become common for building sophisticated chatbots. The "pre-training"...

69 KB (6,595 words) - 13:08, 23 July 2024

GigaChat (category Generative pre-trained transformers)

GigaChat is a chatbot developed by financial services company Sberbank and launched in April 2023. It is positioned as a Russian alternative to ChatGPT...

3 KB (228 words) - 19:11, 23 June 2024

changing, and that is created by a system; Generative pre-trained transformer – Type of large language model; Generative science – Study of how complex behaviour...

4 KB (485 words) - 17:19, 24 June 2024

regulatory authorities on August 31, 2023. "Ernie 3.0", the language model, was trained with 10 billion parameters on a 4 terabyte (TB) corpus which consists of...

15 KB (1,416 words) - 21:43, 2 July 2024

Microsoft Copilot (category Generative pre-trained transformers)

Microsoft Copilot is a generative artificial intelligence chatbot developed by Microsoft. Based on a large language model, it was launched in February...

53 KB (4,771 words) - 21:58, 15 July 2024

Internet, medicine, and artificial intelligence, in particular generative pre-trained transformers. In economics, it is theorized that initial adoption of a...

12 KB (823 words) - 02:38, 14 July 2024