In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\mathrm{KL}}(P \parallel Q)$...

72 KB (12,414 words) - 21:33, 10 July 2024

as information radius (IRad) or total divergence to the average. It is based on the Kullback–Leibler divergence, with some notable (and useful) differences...

16 KB (2,299 words) - 06:02, 1 May 2024

called Kullback–Leibler divergence), which is central to information theory. There are numerous other specific divergences and classes of divergences, notably...

20 KB (2,629 words) - 12:06, 15 February 2024

George Washington University. The Kullback–Leibler divergence is named after Kullback and Richard Leibler. Kullback was born to Jewish parents in Brooklyn...

9 KB (870 words) - 07:37, 31 May 2024

The only divergence on $\Gamma_n$ that is both a Bregman divergence and an f-divergence is the Kullback–Leibler divergence. If n ≥...

26 KB (4,434 words) - 13:48, 23 June 2024

of $\ln(x^k)$ equal to $\ln(\lambda^k) - \gamma$. The Kullback–Leibler divergence between two Weibull distributions is given by $D_{\mathrm{KL}}(\mathrm{Weib}$...

37 KB (5,702 words) - 20:21, 4 July 2024

at the National Security Agency, he and Solomon Kullback formulated the Kullback–Leibler divergence, a measure of similarity between probability distributions...

4 KB (273 words) - 17:38, 25 May 2024

Maximum likelihood estimation (section Relation to minimizing Kullback–Leibler divergence and cross entropy)

$Q_{\hat{\theta}}$) that has a minimal distance, in terms of Kullback–Leibler divergence, to the real probability distribution from which our data were...

66 KB (9,626 words) - 01:12, 8 July 2024

dimensionality of the vector space, and the result has units of nats. The Kullback–Leibler divergence from $\mathcal{N}_1(\boldsymbol{\mu}_1, \boldsymbol{\Sigma}_1)$...

65 KB (9,511 words) - 11:03, 12 July 2024

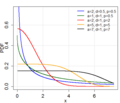

Rényi entropy (redirect from Renyi divergence)

defined a spectrum of divergence measures generalising the Kullback–Leibler divergence. The Rényi divergence of order α or alpha-divergence of a distribution...

21 KB (3,449 words) - 05:51, 15 May 2024

$(X) = k + \ln\theta + \ln\Gamma(k) + (1-k)\psi(k)$. The Kullback–Leibler divergence (KL-divergence) of Gamma($\alpha_p$, $\beta_p$) ("true" distribution) from Gamma($\alpha_q$...

60 KB (8,739 words) - 23:14, 6 July 2024

$P_Y)$ where $D_{\mathrm{KL}}$ is the Kullback–Leibler divergence, and $P_X \otimes P_Y$ is the outer...

57 KB (8,716 words) - 22:04, 11 July 2024

normal distributions as special cases. Kullback–Leibler divergence (KLD) is a method used to compute the divergence or similarity between two probability...

23 KB (2,748 words) - 05:34, 25 May 2024

$= e^{1-\lambda x}\} = e^{1-\lambda x}$ The directed Kullback–Leibler divergence in nats of $e^{\lambda}$ ("approximating"...

42 KB (6,567 words) - 10:39, 19 June 2024

the Kullback-Leibler divergence between Cauchy distributions". arXiv:1905.10965 [cs.IT]. Nielsen, Frank; Okamura, Kazuki (2023). "On f-Divergences Between...

45 KB (6,871 words) - 11:17, 23 April 2024

of the relative entropy (i.e., the Kullback–Leibler divergence); specifically, it is the Hessian of the divergence. Alternately, it can be understood...

26 KB (4,691 words) - 20:45, 27 June 2024

In probability theory and statistics, the normal-inverse-gamma distribution (or Gaussian-inverse-gamma distribution) is a four-parameter family of multivariate...

12 KB (2,039 words) - 15:49, 7 January 2024

fit to the distribution) because the ELBO includes a Kullback-Leibler divergence (KL divergence) term which decreases the ELBO due to an internal part...

19 KB (4,047 words) - 04:54, 25 June 2024

has a well-specified asymptotic distribution. The Kullback–Leibler divergence (or information divergence, information gain, or relative entropy) is a way...

55 KB (7,138 words) - 17:27, 27 June 2024

The G-test statistic is proportional to the Kullback–Leibler divergence of the theoretical distribution from the empirical distribution:...

16 KB (2,505 words) - 14:34, 2 February 2024

Divergence (statistics), a measure of dissimilarity between probability measures: Bregman divergence, f-divergence, Jensen–Shannon divergence, Kullback–Leibler...

3 KB (402 words) - 08:50, 1 July 2023

functions of two generalized gamma distributions, then their Kullback–Leibler divergence is given by $D_{\mathrm{KL}}(f_1 \parallel f_2) = \int_0^\infty f_1(x; a_1, d_1$...

8 KB (1,208 words) - 04:44, 26 May 2024

be formulated using the Kullback–Leibler divergence $D_{\mathrm{KL}}(p \parallel q)$, divergence of $p$...

18 KB (3,196 words) - 12:49, 9 July 2024

density functions of two Gompertz distributions, then their Kullback–Leibler divergence is given by $D_{\mathrm{KL}}(f_1 \parallel f_2) = \int_0^\infty f_1(x; b_1, \eta_1)$...

12 KB (1,388 words) - 08:13, 3 June 2024

points in the low-dimensional map, and it minimizes the Kullback–Leibler divergence (KL divergence) between the two distributions with respect to the locations...

13 KB (1,834 words) - 17:08, 12 July 2024

$(X|Y) = \mathrm{H}(X,Y) - \mathrm{H}(Y)$. The Kullback–Leibler divergence (or information divergence, information gain, or relative entropy) is a way...

12 KB (2,183 words) - 09:07, 19 May 2024

variation distance (or statistical distance) in terms of the Kullback–Leibler divergence. The inequality is tight up to constant factors. Pinsker's inequality...

8 KB (1,356 words) - 17:26, 18 March 2024

theory and machine learning, information gain is a synonym for Kullback–Leibler divergence; the amount of information gained about a random variable or...

21 KB (3,001 words) - 04:04, 17 December 2023

that the Kullback–Leibler divergence is non-negative. Another inequality concerning the Kullback–Leibler divergence is known as Kullback's inequality...

12 KB (1,804 words) - 14:04, 1 April 2024

Fisher information (section F-divergence)

information is related to relative entropy. The relative entropy, or Kullback–Leibler divergence, between two distributions $p$ and $q$...

50 KB (7,564 words) - 04:10, 9 July 2024