Low-rank matrix approximations are essential tools in the application of kernel methods to large-scale learning problems. Kernel methods (for instance...

14 KB (2,272 words) - 01:07, 20 June 2025

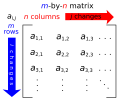

In mathematics, low-rank approximation refers to the process of approximating a given matrix by a matrix of lower rank. More precisely, it is a minimization...

22 KB (3,884 words) - 11:38, 8 April 2025

Singular value decomposition (redirect from Matrix approximation)

decomposition of the original matrix $\mathbf{M}$, but rather provides the optimal low-rank matrix approximation $\tilde{\mathbf{M}}$...

91 KB (14,592 words) - 16:06, 16 June 2025

can be used in the same way as the low-rank approximation of the singular value decomposition (SVD). CUR approximations are less accurate than the SVD, but...

6 KB (981 words) - 07:25, 17 June 2025

or is low-rank. For example, one may assume the matrix has low-rank structure, and then seek to find the lowest rank matrix or, if the rank of the completed...

39 KB (6,402 words) - 16:38, 27 June 2025

Analysis (PCA) exploit SVD: singular value decomposition yields low-rank approximations of data, effectively treating the data covariance as singular by...

12 KB (1,579 words) - 01:41, 29 June 2025

of these approximation methods can be expressed in purely linear algebraic or functional analytic terms as matrix or function approximations. Others are...

12 KB (2,033 words) - 15:51, 26 November 2024

matrices (H-matrices) are used as data-sparse approximations of non-sparse matrices. While a sparse matrix of dimension $n$ can be represented...

15 KB (2,149 words) - 21:04, 14 April 2025

such norms are referred to as matrix norms. Matrix norms differ from vector norms in that they must also interact with matrix multiplication. Given a field...

28 KB (4,788 words) - 21:25, 24 May 2025

In linear algebra, a Hankel matrix (or catalecticant matrix), named after Hermann Hankel, is a rectangular matrix in which each ascending skew-diagonal...

8 KB (1,249 words) - 21:04, 14 April 2025

Locality-sensitive hashing Log-linear model Logistic model tree Low-rank approximation Low-rank matrix approximations MATLAB MIMIC (immunology) MXNet Mallet (software...

39 KB (3,386 words) - 19:51, 2 June 2025

Model compression (section Low-rank factorization)

accelerates matrix multiplication by $W$. Low-rank approximations can be found by singular value decomposition (SVD). The choice of rank for...

11 KB (1,145 words) - 14:54, 24 June 2025

Principal component analysis (category Matrix decompositions)

Kernel PCA L1-norm principal component analysis Low-rank approximation Matrix decomposition Non-negative matrix factorization Nonlinear dimensionality reduction...

117 KB (14,851 words) - 03:05, 30 June 2025

Woodbury matrix identity – named after Max A. Woodbury – says that the inverse of a rank-k correction of some matrix can be computed by doing a rank-k correction...

17 KB (2,090 words) - 21:09, 14 April 2025

except using approximations of the derivatives of the functions in place of exact derivatives. Newton's method requires the Jacobian matrix of all partial...

19 KB (2,264 words) - 13:41, 30 June 2025

one wishes to compare $p(x,y)$ to a low-rank matrix approximation in some unknown variable $w$; that is, to what...

56 KB (8,853 words) - 23:22, 5 June 2025

eigenvalues is equal to the rank of the matrix A, and also the dimension of the image (or range) of the corresponding matrix transformation, as well as...

40 KB (5,590 words) - 01:51, 27 February 2025

(singular-value decomposition) which computes the low-rank approximation of a single matrix (or a set of 1D vectors). Let matrix $X = [x_1, \ldots, x_n]$...

3 KB (518 words) - 19:10, 28 September 2023

Kernel (linear algebra) (redirect from Kernel (matrix))

their rank: because of rounding errors, a floating-point matrix almost always has full rank, even when it is an approximation of a matrix of a much...

24 KB (3,724 words) - 13:03, 11 June 2025

V.; Lim, L. (2008). "Tensor Rank and the Ill-Posedness of the Best Low-Rank Approximation Problem". SIAM Journal on Matrix Analysis and Applications. 30...

36 KB (6,321 words) - 21:48, 6 June 2025

Latent semantic analysis (section Occurrence matrix)

occurrence matrix, LSA finds a low-rank approximation to the term-document matrix. There could be various reasons for these approximations: The original...

58 KB (7,613 words) - 03:53, 2 June 2025

total number of pages. The PageRank values are the entries of the dominant right eigenvector of the modified adjacency matrix rescaled so that each column...

71 KB (8,808 words) - 00:14, 2 June 2025

Kronecker product (category Matrix theory)

rank of a matrix equals the number of nonzero singular values, we find that $\operatorname{rank}(A \otimes B) = \operatorname{rank} A \, \operatorname{rank} B$...

41 KB (6,211 words) - 06:41, 24 June 2025

includes a quality of data approximation and some penalty terms for the bending of the manifold. The popular initial approximations are generated by linear...

48 KB (6,119 words) - 04:01, 2 June 2025

j]} element is incremented. The Spearman's rank correlation can then be computed, based on the count matrix $M$, using linear algebra...

33 KB (4,320 words) - 00:47, 18 June 2025

LU decomposition (category Matrix decompositions)

find a low rank approximation to an LU decomposition using a randomized algorithm. Given an input matrix $A$ and a desired low rank $k$...

54 KB (8,677 words) - 22:02, 11 June 2025

least squares approximation of the data is generically equivalent to the best, in the Frobenius norm, low-rank approximation of the data matrix. In the least...

20 KB (3,298 words) - 16:34, 28 October 2024

In mathematics, a Euclidean distance matrix is an n×n matrix representing the spacing of a set of n points in Euclidean space. For points $x_1, x_2,$...

17 KB (2,448 words) - 07:00, 17 June 2025

that retains all the defining properties of the matrix SVD. The matrix SVD simultaneously yields a rank-𝑅 decomposition and orthonormal subspaces for...

27 KB (4,394 words) - 02:19, 29 June 2025

parameters, and so continuous approximations or bounds on evaluation measures have to be used. For example the SoftRank algorithm. LambdaMART is a pairwise...

54 KB (4,442 words) - 08:47, 30 June 2025