Stochastic gradient descent (often abbreviated SGD) is an iterative method for optimizing an objective function with suitable smoothness properties (e...

52 KB (6,880 words) - 00:02, 15 October 2024

of gradient descent, stochastic gradient descent, serves as the most basic algorithm used for training most deep networks today. Gradient descent is based...

37 KB (5,311 words) - 03:39, 20 October 2024

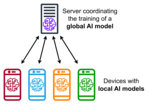

Federated learning (redirect from Federated stochastic gradient descent)

of stochastic gradient descent, where gradients are computed on a random subset of the total dataset and then used to make one step of the gradient descent...

50 KB (5,762 words) - 05:50, 25 September 2024

Online machine learning (redirect from Incremental stochastic gradient descent)

out-of-core versions of machine learning algorithms, for example, stochastic gradient descent. When combined with backpropagation, this is currently the de...

25 KB (4,740 words) - 11:23, 30 August 2024

Stochastic gradient Langevin dynamics (SGLD) is an optimization and sampling technique composed of characteristics from Stochastic gradient descent, a...

9 KB (1,370 words) - 15:18, 4 October 2024

Gradient descent Stochastic gradient descent Wolfe conditions Absil, P. A.; Mahony, R.; Andrews, B. (2005). "Convergence of the iterates of Descent methods...

29 KB (4,572 words) - 20:09, 28 July 2024

Backpropagation (section Second-order gradient descent)

learning algorithm – including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer,...

54 KB (7,445 words) - 21:46, 13 October 2024

Reparameterization trick (category Stochastic optimization)

enabling the optimization of parametric probability models using stochastic gradient descent, and the variance reduction of estimators. It was developed in...

11 KB (1,703 words) - 08:29, 14 October 2024

desired result. In stochastic gradient descent, we have a function to minimize $f(x)$, but we cannot sample its gradient directly. Instead...

18 KB (3,281 words) - 12:34, 19 October 2024

being stuck at local minima. One can also apply a widespread stochastic gradient descent method with iterative projection to solve this problem. The idea...

23 KB (3,498 words) - 08:18, 10 September 2024

for all nodes in the tree. Typically, stochastic gradient descent (SGD) is used to train the network. The gradient is computed using backpropagation through...

9 KB (954 words) - 13:42, 25 August 2024

descent Stochastic gradient descent Coordinate descent Frank–Wolfe algorithm Landweber iteration Random coordinate descent Conjugate gradient method Derivation...

1 KB (109 words) - 05:36, 17 April 2022

See the brief discussion in Stochastic gradient descent. Bhatnagar, S., Prasad, H. L., and Prashanth, L. A. (2013), Stochastic Recursive Algorithms for Optimization:...

9 KB (1,555 words) - 13:56, 4 October 2024

Hyperparameter (machine learning) Hyperparameter optimization Stochastic gradient descent Variable metric methods Overfitting Backpropagation AutoML Model...

9 KB (1,108 words) - 10:15, 30 April 2024

of selection can vary with the steepness of the uphill move." Stochastic gradient descent Russell, S.; Norvig, P. (2010). Artificial Intelligence: A Modern...

749 bytes (69 words) - 15:25, 27 May 2022

Neural network (machine learning) (redirect from Stochastic neural network)

"gates." The first deep learning multilayer perceptron trained by stochastic gradient descent was published in 1967 by Shun'ichi Amari. In computer experiments...

159 KB (16,821 words) - 16:20, 19 October 2024

Methods of this class include: stochastic approximation (SA), by Robbins and Monro (1951) stochastic gradient descent finite-difference SA by Kiefer and...

12 KB (1,068 words) - 01:33, 5 August 2024

in machine learning and data compression. His work presents stochastic gradient descent as a fundamental learning algorithm. He is also one of the main...

7 KB (636 words) - 02:38, 14 May 2024

Preconditioner (redirect from Preconditioned gradient descent)

grids. If used in gradient descent methods, random preconditioning can be viewed as an implementation of stochastic gradient descent and can lead to faster...

22 KB (3,511 words) - 04:16, 29 April 2024

introduced the view of boosting algorithms as iterative functional gradient descent algorithms. That is, algorithms that optimize a cost function over...

28 KB (4,208 words) - 19:54, 2 October 2024

if $\mathsf{B}$ wins, and, using stochastic gradient descent, the log loss is minimized as follows: $R_A \leftarrow R_A - \eta \frac{d\ell}{dR_A}$...

88 KB (11,606 words) - 02:17, 18 October 2024

Slope (redirect from Gradient of a line)

gradient method, generalizes the conjugate gradient method to nonlinear optimization Stochastic gradient descent, iterative method for optimizing a differentiable...

18 KB (2,647 words) - 20:06, 10 October 2024

Stochastic gradient descent Stochastic variance reduction Toulis, Panos; Airoldi, Edoardo (2015). "Scalable estimation strategies based on stochastic...

27 KB (4,148 words) - 07:02, 5 September 2024

(difference between the desired and the actual signal). It is a stochastic gradient descent method in that the filter is only adapted based on the error...

16 KB (3,045 words) - 22:57, 1 May 2024

Amari reported the first multilayered neural network trained by stochastic gradient descent, which was able to classify non-linearly separable pattern classes...

16 KB (1,929 words) - 06:24, 19 October 2024

method – Method for finding stationary points of a function Stochastic gradient descent – Optimization algorithm – uses one example at a time, rather...

13 KB (1,649 words) - 00:59, 29 September 2024

Empirically, feature scaling can improve the convergence speed of stochastic gradient descent. In support vector machines, it can reduce the time to find support...

8 KB (1,041 words) - 01:18, 24 August 2024

using only a stochastic gradient, at a $1/n$ lower cost than gradient descent. Accelerated methods in the stochastic variance reduction...

12 KB (1,858 words) - 18:27, 1 October 2024

approaches, including stochastic gradient descent for training deep neural networks, and ensemble methods (such as random forests and gradient boosted trees)...

30 KB (4,617 words) - 18:27, 19 September 2024

end It also has a StochasticGradient class for training a neural network using stochastic gradient descent, although the optim package provides...

9 KB (863 words) - 03:28, 7 September 2024