In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable $Y$...

11 KB (2,071 words) - 00:39, 12 July 2024

The conditional quantum entropy is an entropy measure used in quantum information theory. It is a generalization of the conditional entropy of classical...

4 KB (582 words) - 09:24, 6 February 2023

Despite similar notation, joint entropy should not be confused with cross-entropy. The conditional entropy or conditional uncertainty of X given random...

59 KB (7,563 words) - 06:04, 2 November 2024

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

70 KB (10,018 words) - 00:46, 4 October 2024

to a stochastic process. For a strongly stationary process, the conditional entropy for the latest random variable eventually tends towards this rate value...

5 KB (781 words) - 00:37, 19 June 2024

for example, differential entropy may be negative. The differential analogues of entropy, joint entropy, conditional entropy, and mutual information are...

12 KB (2,183 words) - 12:22, 14 August 2024

Shannon entropy and its quantum generalization, the von Neumann entropy, one can define a conditional version of min-entropy. The conditional quantum...

14 KB (2,663 words) - 13:40, 16 September 2024

In information theory, the cross-entropy between two probability distributions $p$ and $q$, over the same underlying...

19 KB (3,247 words) - 09:04, 20 October 2024

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\text{KL}}(P \parallel Q)$...

73 KB (12,534 words) - 13:54, 28 October 2024

Alternatively, we may write in terms of joint and conditional entropies as $I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z)$...

11 KB (2,385 words) - 18:48, 11 July 2024

Joint entropy is used in the definition of conditional entropy: $H(X|Y) = H(X,Y) - H(Y)$...

7 KB (952 words) - 06:39, 16 July 2024
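
The identity quoted in the joint entropy result above, H(X|Y) = H(X,Y) − H(Y), can be checked numerically. The following is a minimal sketch, not taken from any of the listed articles: the joint distribution `joint` and all function names are hypothetical, chosen only for illustration, and entropies are measured in bits (base-2 logarithm).

```python
import math

# Hypothetical joint distribution P(x, y), for illustration only.
joint = {
    ("a", 0): 0.25,
    ("a", 1): 0.25,
    ("b", 0): 0.50,
    ("b", 1): 0.00,
}

def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def marginal_y(joint):
    """Marginal distribution P(y), obtained by summing the joint over x."""
    out = {}
    for (_, y), p in joint.items():
        out[y] = out.get(y, 0.0) + p
    return out

def conditional_entropy_direct(joint):
    """H(X|Y) = -sum_{x,y} P(x,y) log2 P(x|y), with P(x|y) = P(x,y) / P(y)."""
    p_y = marginal_y(joint)
    return -sum(
        p * math.log2(p / p_y[y]) for (_, y), p in joint.items() if p > 0
    )

def conditional_entropy_chain(joint):
    """H(X|Y) computed as H(X,Y) - H(Y)."""
    return entropy(joint.values()) - entropy(marginal_y(joint).values())

if __name__ == "__main__":
    print(conditional_entropy_direct(joint))
    print(conditional_entropy_chain(joint))
```

For this example both functions give about 0.689 bits, since H(X,Y) = 1.5 bits and H(Y) ≈ 0.811 bits.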

the joint entropy. This is equivalent to the fact that the conditional quantum entropy may be negative, while the classical conditional entropy may never...

5 KB (827 words) - 13:37, 16 August 2023

joint, conditional differential entropy, and relative entropy are defined in a similar fashion. Unlike the discrete analog, the differential entropy has...

22 KB (2,728 words) - 00:23, 17 July 2024

information theoretic Shannon entropy. The von Neumann entropy is also used in different forms (conditional entropies, relative entropies, etc.) in the framework...

21 KB (3,026 words) - 19:19, 9 September 2024

Mutual information (redirect from Mutual entropy)

marginal entropies, $\mathrm{H}(X \mid Y)$ and $\mathrm{H}(Y \mid X)$ are the conditional entropies, and...

57 KB (8,727 words) - 16:23, 24 September 2024

$H(Y \mid X)$ are the entropy of the output signal Y and the conditional entropy of the output signal given the input signal, respectively:...

14 KB (2,231 words) - 18:40, 27 April 2024

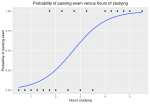

Logistic regression (redirect from Conditional logit analysis)

where $H(Y \mid X)$ is the conditional entropy and $D_{\text{KL}}$ is the Kullback–Leibler divergence...

127 KB (20,643 words) - 21:34, 15 October 2024

of Secrecy Systems, conditional entropy, conditional quantum entropy, confusion and diffusion, cross-entropy, data compression, entropic uncertainty (Hirschman...

1 KB (93 words) - 09:42, 8 August 2023

In statistics, a maximum-entropy Markov model (MEMM), or conditional Markov model (CMM), is a graphical model for sequence labeling that combines features...

7 KB (1,025 words) - 16:43, 13 January 2021

quantum relative entropy is a measure of distinguishability between two quantum states. It is the quantum mechanical analog of relative entropy. For simplicity...

13 KB (2,405 words) - 00:45, 29 December 2022

of random variables and a measure over sets. Namely the joint entropy, conditional entropy, and mutual information can be considered as the measure of a...

12 KB (1,762 words) - 23:53, 6 September 2024

conditional entropy of $T$ given the value of attribute $a$. This is intuitively plausible when interpreting entropy...

21 KB (3,024 words) - 05:43, 28 September 2024

variable e in terms of its entropy. One can then subtract the content of e that is irrelevant to h (given by its conditional entropy conditioned on h) from...

12 KB (1,798 words) - 14:10, 31 October 2024

Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision entropy, and min-entropy. The...

21 KB (3,481 words) - 00:23, 23 October 2024

$H(X|Y) = -\sum_{i,j} P(x_i, y_j) \log P(x_i | y_j)$ is the conditional entropy, $P(e) = P(X \neq \tilde{X})$...

7 KB (1,480 words) - 16:35, 24 October 2024

Information diagram (redirect from Entropy diagram)

relationships among Shannon's basic measures of information: entropy, joint entropy, conditional entropy and mutual information. Information diagrams are a useful...

3 KB (494 words) - 06:20, 4 March 2024

identically-distributed random variable, and the operational meaning of the Shannon entropy. Named after Claude Shannon, the source coding theorem shows that, in the...

12 KB (1,881 words) - 09:30, 2 May 2024

entropy of X. The above definition of transfer entropy has been extended by other types of entropy measures such as Rényi entropy. Transfer entropy is...

10 KB (1,293 words) - 17:15, 7 July 2024

that quantum conditional entropies can be negative, and quantum mutual informations can exceed the classical bound of the marginal entropy. The strong...

29 KB (4,679 words) - 21:49, 13 January 2024

$H$ is simply the entropy of a symbol) and the continuous-valued case (where $H$ is the differential entropy instead). The definition...

22 KB (3,951 words) - 12:22, 22 September 2024