In probability theory and statistics, a Markov chain or Markov process is a stochastic process describing a sequence of possible events in which the probability...

94 KB (12,750 words) - 22:22, 19 December 2024

In statistics, Markov chain Monte Carlo (MCMC) is a class of algorithms used to draw samples from a probability distribution. Given a probability distribution...

29 KB (3,126 words) - 05:23, 19 December 2024

A hidden Markov model (HMM) is a Markov model in which the observations are dependent on a latent (or hidden) Markov process (referred to as $X$...

52 KB (6,811 words) - 04:08, 22 December 2024

examples of Markov chains and Markov processes in action. All examples are in the countable state space. For an overview of Markov chains in general state...

14 KB (2,429 words) - 21:01, 8 July 2024

A continuous-time Markov chain (CTMC) is a continuous stochastic process in which, for each state, the process will change state according to an exponential...

23 KB (4,241 words) - 22:53, 10 December 2024

Markov chain geostatistics uses Markov chain spatial models, simulation algorithms and associated spatial correlation measures (e.g., transiogram) based...

2 KB (234 words) - 15:05, 12 September 2021

In the mathematical theory of probability, an absorbing Markov chain is a Markov chain in which every state can reach an absorbing state. An absorbing...

12 KB (1,762 words) - 20:08, 14 December 2024
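
The definition in the snippet above (every state can reach an absorbing state) can be checked mechanically for a finite chain. The following is only a minimal sketch: it assumes the chain is given as a row-stochastic transition matrix `P` stored as nested lists, treats state `i` as absorbing when `P[i][i]` equals 1, and uses hypothetical function names and example matrices that do not come from the listed article.

```python
from typing import List, Set

Matrix = List[List[float]]


def absorbing_states(P: Matrix, tol: float = 1e-12) -> Set[int]:
    """States that return to themselves with probability 1 (assumed convention)."""
    return {i for i, row in enumerate(P) if abs(row[i] - 1.0) <= tol}


def is_absorbing_chain(P: Matrix) -> bool:
    """True if every state can reach some absorbing state.

    Searches backward from the absorbing states along transitions
    that have positive probability.
    """
    absorbing = absorbing_states(P)
    if not absorbing:
        return False
    n = len(P)
    reached = set(absorbing)
    frontier = list(absorbing)
    while frontier:
        j = frontier.pop()
        for i in range(n):
            # State i can step to j, and j already reaches an absorbing state.
            if i not in reached and P[i][j] > 0:
                reached.add(i)
                frontier.append(i)
    return len(reached) == n


# Invented example: state 0 is absorbing and reachable from states 1 and 2.
absorbing_example = [
    [1.0, 0.0, 0.0],
    [0.5, 0.0, 0.5],
    [0.0, 0.5, 0.5],
]

# Invented counterexample: states 1 and 2 only ever swap with each other.
trapped_example = [
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
]

result_absorbing = is_absorbing_chain(absorbing_example)
result_trapped = is_absorbing_chain(trapped_example)
```

The backward search visits each state at most once, so the check costs on the order of the number of matrix entries.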

In mathematics, the quantum Markov chain is a reformulation of the ideas of a classical Markov chain, replacing the classical definitions of probability...

2 KB (201 words) - 21:28, 18 January 2022

In probability, a discrete-time Markov chain (DTMC) is a sequence of random variables, known as a stochastic process, in which the value of the next variable...

25 KB (4,252 words) - 01:57, 27 June 2024

The Lempel–Ziv–Markov chain algorithm (LZMA) is an algorithm used to perform lossless data compression. It has been used in the 7z format of the 7-Zip...

31 KB (3,582 words) - 05:02, 19 December 2024

distribution of a previous state. An example use of a Markov chain is Markov chain Monte Carlo, which uses the Markov property to prove that a particular method...

10 KB (1,175 words) - 07:55, 18 July 2024

from its connection to Markov chains, a concept developed by the Russian mathematician Andrey Markov. The "Markov" in "Markov decision process" refers...

34 KB (5,086 words) - 15:40, 20 December 2024

known as the Markov chain. He was also a strong, close to master-level, chess player. Markov and his younger brother Vladimir Andreyevich Markov (1871–1897)...

10 KB (1,072 words) - 15:39, 28 November 2024

stochastic process satisfying the Markov property is known as a Markov chain. A stochastic process has the Markov property if the conditional probability...

8 KB (1,126 words) - 01:02, 5 December 2024

Stochastic matrix (redirect from Markov transition matrix)

stochastic matrix is a square matrix used to describe the transitions of a Markov chain. Each of its entries is a nonnegative real number representing a probability...

19 KB (2,798 words) - 20:31, 26 November 2024
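
As a rough illustration of the snippet above, the sketch below checks that a matrix is square with nonnegative entries and rows summing to 1, then advances a distribution by one step. It assumes the row-stochastic (right stochastic) convention, which the snippet does not specify; the function names and the two-state example are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def is_row_stochastic(P: Matrix, tol: float = 1e-9) -> bool:
    """True if P is square, nonnegative, and every row sums to 1."""
    n = len(P)
    if n == 0:
        return False
    for row in P:
        if len(row) != n:
            return False
        if any(p < 0 for p in row):
            return False
        if abs(sum(row) - 1.0) > tol:
            return False
    return True


def step(dist: List[float], P: Matrix) -> List[float]:
    """Advance a row distribution one step: new[j] = sum_i dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


# Invented two-state chain used only for illustration.
P = [
    [0.9, 0.1],
    [0.5, 0.5],
]
start = [1.0, 0.0]          # all probability mass on state 0
after_one = step(start, P)  # distribution after one transition
```

Starting with all mass on state 0, one step simply reads off the first row of `P`.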

counting measures. The Markov chain is ergodic, so the shift example from above is a special case of the criterion. Markov chains with recurring communicating...

55 KB (8,917 words) - 13:22, 8 December 2024

characterize continuous-time Markov processes. In particular, they describe how the probability of a continuous-time Markov process in a certain state changes...

9 KB (1,405 words) - 01:15, 31 August 2024

Markov chain is the time until the Markov chain is "close" to its steady state distribution. More precisely, a fundamental result about Markov chains...

5 KB (604 words) - 20:16, 9 July 2024

Random walk (section As a Markov chain)

$O(a+b)$ in the general one-dimensional random walk Markov chain. Some of the results mentioned above can be derived from properties of...

55 KB (7,649 words) - 13:39, 25 November 2024

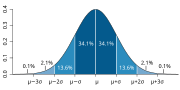

In the mathematical theory of random processes, the Markov chain central limit theorem has a conclusion somewhat similar in form to that of the classic...

6 KB (1,166 words) - 00:34, 19 June 2024

Detailed balance (redirect from Reversible markov chain)

balance in kinetics seem to be clear. A Markov process is called a reversible Markov process or reversible Markov chain if there exists a positive stationary...

36 KB (5,848 words) - 15:24, 17 December 2024

Library of Congress Card Catalog Number 65-17394. "We may think of a Markov chain as a process that moves successively through a set of states s1, s2,...

40 KB (4,523 words) - 23:47, 9 December 2024

Chapman–Kolmogorov equation (category Markov processes)

equation Examples of Markov chains Category of Markov kernels Perrone (2024), pp. 10–11 Pavliotis, Grigorios A. (2014). "Markov Processes and the Chapman–Kolmogorov...

6 KB (1,003 words) - 10:11, 1 October 2024

computationally intensive statistical methods including resampling methods, Markov chain Monte Carlo methods, local regression, kernel density estimation, artificial...

14 KB (1,443 words) - 07:17, 1 November 2024

scientists. Markov processes and Markov chains are named after Andrey Markov who studied Markov chains in the early 20th century. Markov was interested...

168 KB (18,653 words) - 09:51, 14 December 2024

Eigenvalues and eigenvectors (section Markov chains)

components. This vector corresponds to the stationary distribution of the Markov chain represented by the row-normalized adjacency matrix; however, the adjacency...

102 KB (13,609 words) - 13:41, 19 December 2024

and Salvesen introduced a novel time-dependent rating method using the Markov Chain model. They suggested modifying the generalized linear model above for...

17 KB (2,911 words) - 08:28, 26 July 2024

boundary were coined by Judea Pearl in 1988. A Markov blanket can be constituted by a set of Markov chains. A Markov blanket of a random variable $Y$...

4 KB (538 words) - 06:28, 15 May 2024

statistics and statistical physics, the Metropolis–Hastings algorithm is a Markov chain Monte Carlo (MCMC) method for obtaining a sequence of random samples...

30 KB (4,556 words) - 16:16, 6 December 2024

processes, such as Markov chains and Poisson processes, can be derived as special cases among the class of Markov renewal processes, while Markov renewal processes...

4 KB (834 words) - 02:10, 13 July 2023