An artificial neural network's learning rule or learning process is a method, mathematical logic or algorithm which improves the network's performance...

9 KB (1,198 words) - 20:00, 27 October 2024

Association rule learning is a rule-based machine learning method for discovering interesting relations between variables in large databases. It is intended...

49 KB (6,704 words) - 22:16, 28 November 2024
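
Two standard measures of how "interesting" a discovered rule X ⇒ Y is are support and confidence. Support is the fraction of transactions that contain all the items. Confidence is support(X ∪ Y) / support(X). The sketch below assumes each transaction is a set of item names. The function names and the toy basket data are hypothetical and chosen only for illustration.

```python
from typing import FrozenSet, List

Itemset = FrozenSet[str]

def support(itemset: Itemset, transactions: List[Itemset]) -> float:
    """Fraction of transactions that contain every item in `itemset`."""
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)

def confidence(lhs: Itemset, rhs: Itemset, transactions: List[Itemset]) -> float:
    """Estimate of P(rhs | lhs) for the rule lhs => rhs."""
    return support(lhs | rhs, transactions) / support(lhs, transactions)

# Hypothetical toy database: each transaction is one shopping basket.
transactions = [
    frozenset({"bread", "milk"}),
    frozenset({"bread", "butter", "milk"}),
    frozenset({"butter", "milk"}),
    frozenset({"bread", "butter"}),
]
lhs, rhs = frozenset({"bread"}), frozenset({"milk"})
print(support(lhs | rhs, transactions))    # 0.5
print(confidence(lhs, rhs, transactions))  # 2 of the 3 bread baskets contain milk
```

Algorithms such as Apriori avoid scoring every possible rule. They first keep only itemsets whose support clears a minimum threshold.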

Rule-based machine learning (RBML) is a term in computer science intended to encompass any machine learning method that identifies, learns, or evolves...

4 KB (450 words) - 07:17, 11 December 2024

order to make a prediction. Rule-based machine learning approaches include learning classifier systems, association rule learning, and artificial immune systems...

133 KB (14,727 words) - 21:41, 4 January 2025

In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from...

52 KB (6,574 words) - 06:47, 2 November 2024
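
Hard majority voting is one of the simplest ways to combine several models into an ensemble. Each member predicts a label, and the most common label wins. The threshold "weak learners" below are hypothetical stand-ins for trained models, so this is a minimal sketch of the combination step only.

```python
from collections import Counter
from typing import Callable, Sequence

Classifier = Callable[[float], int]

def majority_vote(classifiers: Sequence[Classifier], x: float) -> int:
    """Hard voting: return the label predicted by the most ensemble members."""
    votes = Counter(clf(x) for clf in classifiers)
    return votes.most_common(1)[0][0]

def make_threshold_classifier(threshold: float) -> Classifier:
    """Hypothetical weak learner: predicts 1 when x exceeds its threshold."""
    return lambda x: 1 if x > threshold else 0

ensemble = [make_threshold_classifier(t) for t in (0.3, 0.5, 0.7)]
print(majority_vote(ensemble, 0.6))  # 1 (two of three members vote 1)
print(majority_vote(ensemble, 0.4))  # 0 (only one member votes 1)
```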

middle layer contains recurrent connections that change by a Hebbian learning rule.: 73–75 : Chapter 19, 21 Another model of associative memory is where...

63 KB (8,518 words) - 16:40, 18 December 2024

Reinforcement learning is one of the three basic machine learning paradigms, alongside supervised learning and unsupervised learning. Q-learning at its simplest...

62 KB (7,369 words) - 13:00, 4 January 2025

of learning language and communication, and the stage where a child begins to understand rules and symbols. This has led to a view that learning in organisms...

79 KB (9,982 words) - 08:55, 10 December 2024

Q-learning is a model-free reinforcement learning algorithm that teaches an agent to assign values to each action it might take, conditioned on the agent...

29 KB (3,800 words) - 04:33, 8 December 2024
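
Tabular Q-learning keeps a value Q(s, a) for each state-action pair. After each transition it applies the update Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') − Q(s, a)]. The sketch below assumes a hypothetical four-state corridor where only reaching the right end pays a reward, and uses ε-greedy exploration. The environment, the function names, and all hyperparameters are illustrative choices.

```python
import random

N_STATES = 4          # states 0..3 on a line; state 3 is the terminal goal
ACTIONS = (0, 1)      # 0 = move left, 1 = move right

def step(state, action):
    """Hypothetical toy environment: reward 1 only when the goal is reached."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done, steps = 0, False, 0
        while not done and steps < 100:
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                best = max(Q[s])         # exploit, breaking ties at random
                a = rng.choice([b for b in ACTIONS if Q[s][b] == best])
            s2, r, done = step(s, a)
            # Off-policy target: bootstrap from the best next action (0 at the terminal state).
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s, steps = s2, steps + 1
    return Q

Q = q_learning()
greedy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(N_STATES - 1)]
print(greedy)  # [1, 1, 1]: move right in every non-terminal state
```

The agent never needs a model of `step`. It learns only from sampled transitions, which is what "model-free" means here.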

In machine learning, the delta rule is a gradient descent learning rule for updating the weights of the inputs to artificial neurons in a single-layer...

6 KB (1,104 words) - 04:45, 27 October 2023
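
For a neuron j with activation function g, the delta rule is usually written as Δw_ji = α(t_j − y_j) g'(h_j) x_i. Here h_j is the weighted input sum, t_j is the target, and y_j = g(h_j). The sketch below assumes a single neuron with a logistic-sigmoid activation and a bias weight on a constant input of 1. The OR training set, learning rate, and epoch count are illustrative, not taken from the source.

```python
import math

def sigmoid(h):
    return 1.0 / (1.0 + math.exp(-h))

def delta_rule_train(samples, n_inputs, alpha=0.5, epochs=2000):
    w = [0.0] * (n_inputs + 1)           # w[0] is a bias weight on a constant input of 1
    for _ in range(epochs):
        for x, t in samples:
            xs = [1.0] + list(x)
            h = sum(wi * xi for wi, xi in zip(w, xs))
            y = sigmoid(h)
            g_prime = y * (1.0 - y)      # derivative of the logistic sigmoid at h
            for i in range(len(w)):
                w[i] += alpha * (t - y) * g_prime * xs[i]
    return w

def predict(w, x):
    return sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))

or_data = [((0, 0), 0.0), ((0, 1), 1.0), ((1, 0), 1.0), ((1, 1), 1.0)]
w = delta_rule_train(or_data, n_inputs=2)
print([round(predict(w, x)) for x, _ in or_data])  # [0, 1, 1, 1]
```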

Recurrent neural network (redirect from Real-time recurrent learning)

middle layer contains recurrent connections that change by a Hebbian learning rule.: 73–75 Later, in Principles of Neurodynamics (1961), he described...

89 KB (10,298 words) - 16:27, 20 December 2024

ADALINE (section Learning rule)

$y=\sum_{j=0}^{n} x_j w_j$. The learning rule used by ADALINE is the LMS ("least mean squares") algorithm, a special...

9 KB (1,110 words) - 14:12, 14 November 2024
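
ADALINE computes the linear output y = Σ_{j=0}^{n} x_j w_j. LMS (the Widrow–Hoff rule) then moves the weights along the error of that linear output: w ← w + η(o − y)x, where o is the target. The sketch below assumes x_0 = 1 acts as the bias input. The function names, learning rate, and noise-free toy data (generated from 1 + 2·x1 − 3·x2) are hypothetical.

```python
def adaline_output(w, x):
    """Linear output y = sum_{j=0}^{n} x_j w_j, with x_0 = 1 acting as the bias input."""
    return sum(wj * xj for wj, xj in zip(w, x))

def lms_train(samples, n, eta=0.05, epochs=2000):
    w = [0.0] * (n + 1)
    for _ in range(epochs):
        for x, target in samples:
            xs = [1.0] + list(x)                   # prepend x_0 = 1
            err = target - adaline_output(w, xs)   # error on the linear output, before any threshold
            w = [wj + eta * err * xj for wj, xj in zip(w, xs)]
    return w

# Hypothetical noise-free data generated by target = 1 + 2*x1 - 3*x2.
points = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1), (1, 2)]
samples = [((a, b), 1 + 2 * a - 3 * b) for a, b in points]
w = lms_train(samples, n=2)
print([round(v, 3) for v in w])  # approximately [1.0, 2.0, -3.0]
```

The perceptron updates only on thresholded mistakes. LMS is different: it uses the error of the linear output, which makes it gradient descent on squared error.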

thought to be a substrate for Hebbian learning during development. As suggested by Taylor in 1973, Hebbian learning rules might create informationally efficient...

19 KB (2,389 words) - 22:35, 28 November 2024

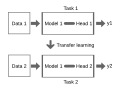

Transfer learning (TL) is a technique in machine learning (ML) in which knowledge learned from a task is re-used in order to boost performance on a related...

15 KB (1,692 words) - 19:53, 27 November 2024

backpropagation, unsupervised learning also employs other methods including: Hopfield learning rule, Boltzmann learning rule, Contrastive Divergence, Wake...

31 KB (2,777 words) - 21:55, 29 November 2024

from are based on a consistent and simple rule. Both offline data collection models, where the model is learning by interacting with a static dataset and...

44 KB (4,969 words) - 11:14, 17 December 2024

Multimodal learning is a type of deep learning that integrates and processes multiple types of data, referred to as modalities, such as text, audio, images...

9 KB (2,338 words) - 08:44, 24 October 2024

Deep reinforcement learning (deep RL) is a subfield of machine learning that combines reinforcement learning (RL) and deep learning. RL considers the problem...

27 KB (2,926 words) - 13:36, 28 June 2024

Deep learning is a subset of machine learning that focuses on utilizing neural networks to perform tasks such as classification, regression, and representation...

183 KB (18,163 words) - 00:40, 5 January 2025

the value function for the current state using the rule: $V(S_t) \leftarrow (1-\alpha)V(S_t) + \underbrace{\alpha}_{\text{learning rate}}\big[\overbrace{R_{t+1} + \gamma V(S_{t+1})}^{\text{The TD target}}\big]$...

12 KB (1,565 words) - 20:36, 20 October 2024
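
The TD(0) rule moves V(S_t) a fraction α of the way toward the TD target R_{t+1} + γV(S_{t+1}). The sketch below assumes a hypothetical deterministic chain of three states that ends after state 2, with reward 1 only on that final transition. The learned values should therefore approach γ², γ, and 1. The function name and the constants are illustrative.

```python
def td0_update(V, s, r, s_next, alpha, gamma):
    """One TD(0) step: V(s) <- (1 - alpha) V(s) + alpha [r + gamma V(s_next)].

    s_next is None when the transition ends the episode (terminal value 0).
    """
    td_target = r + (gamma * V[s_next] if s_next is not None else 0.0)
    V[s] = (1 - alpha) * V[s] + alpha * td_target

# Hypothetical deterministic chain 0 -> 1 -> 2 -> end; reward 1 only on leaving state 2.
V = [0.0, 0.0, 0.0]
for episode in range(1000):
    for s in range(3):
        terminal = s == 2
        td0_update(V, s, r=1.0 if terminal else 0.0,
                   s_next=None if terminal else s + 1, alpha=0.1, gamma=0.9)
print([round(v, 3) for v in V])  # [0.81, 0.9, 1.0]
```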

Active learning is a special case of machine learning in which a learning algorithm can interactively query a human user (or some other information source)...

18 KB (2,205 words) - 16:49, 7 December 2024

A transformer is a deep learning architecture that was developed by researchers at Google and is based on the multi-head attention mechanism, which was...

101 KB (12,612 words) - 22:44, 2 January 2025

weights of an Ising model by Hebbian learning rule as a model of associative memory, adding in the component of learning. This was popularized as the Hopfield...

162 KB (17,167 words) - 06:39, 28 December 2024
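
The snippet describes setting the weights of an Ising-style network with a Hebbian rule to obtain an associative memory. The sketch below shows one common version of that idea. It assumes ±1 units, the outer-product rule w_ij = (1/n) Σ_μ ξ_i^μ ξ_j^μ with no self-connections, and asynchronous sign updates for recall. The stored pattern and the function names are hypothetical.

```python
def store(patterns):
    """Hebbian outer-product weights; w_ii stays 0 (no self-connections)."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    W[i][j] += p[i] * p[j] / n
    return W

def recall(W, state, sweeps=5):
    """Asynchronous updates: each unit takes the sign of its local field in turn."""
    s = list(state)
    for _ in range(sweeps):
        for i in range(len(s)):
            h = sum(W[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if h >= 0 else -1
    return s

pattern = [1, -1, 1, -1, 1, 1, -1, -1]
W = store([pattern])
noisy = pattern.copy()
noisy[0] = -noisy[0]                 # corrupt one unit
print(recall(W, noisy) == pattern)   # True: the stored memory is restored
```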

In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a data set. Choosing informative, discriminating...

9 KB (1,027 words) - 20:39, 23 December 2024

Decision tree learning is a supervised learning approach used in statistics, data mining and machine learning. In this formalism, a classification or...

47 KB (6,524 words) - 12:39, 16 July 2024

learning rule for training neural networks, called the 'novelty rule', to help alleviate catastrophic interference. As its name suggests, this rule helps...

34 KB (4,476 words) - 04:31, 9 December 2024

Hebbian theory (redirect from Hebbian learning)

during the learning process. It was introduced by Donald Hebb in his 1949 book The Organization of Behavior. The theory is also called Hebb's rule, Hebb's...

23 KB (3,310 words) - 21:56, 11 December 2024
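
Hebb's rule is often summarized as Δw_i = η x_i y. A weight grows when its input x_i and the neuron's output y are active together. The sketch below assumes a single linear neuron, y = Σ_i w_i x_i, and a hypothetical input in which units 0 and 1 fire together while unit 2 stays silent. The function name, learning rate, and starting weights are illustrative.

```python
def hebbian_step(w, x, eta=0.1):
    """Plain Hebb's rule for a linear neuron: dw_i = eta * x_i * y, y = sum_i w_i x_i."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + eta * xi * y for wi, xi in zip(w, x)]

w = [0.5, 0.5, 0.5]
pattern = [1.0, 1.0, 0.0]   # hypothetical input: units 0 and 1 fire together, unit 2 is silent
for _ in range(5):
    w = hebbian_step(w, pattern)
print([round(v, 3) for v in w])  # [1.244, 1.244, 0.5]
```

The co-active weights grow by a factor of 1.2 on every presentation, while the silent input's weight is untouched. Because nothing bounds this growth, practical variants add normalization or decay.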

In machine learning (ML), boosting is an ensemble metaheuristic for primarily reducing bias (as opposed to variance). It can also improve the stability...

21 KB (2,240 words) - 19:42, 4 January 2025

Attention is a machine learning method that determines the relative importance of each component in a sequence relative to the other components in that...

49 KB (5,427 words) - 20:14, 27 December 2024
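
A common concrete form of this idea is scaled dot-product attention, the variant used in transformers. For a single query q it scores each key k_i as q·k_i / √d, turns the scores into weights with a softmax, and returns the weighted sum of the values. The sketch below handles one query in pure Python. The function name and the toy keys, values, and query are hypothetical.

```python
import math

def attend(query, keys, values):
    """Scaled dot-product attention for one query over a sequence of key/value pairs."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)                                    # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]                # relative importance; sums to 1
    out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, out

keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, out = attend([3.0, -1.0], keys, values)
print([round(w, 3) for w in weights])  # largest weight goes to the first key
```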

Feedforward neural network (section Learning)

The Journal of Machine Learning Research. 3: 1137–1155. Auer, Peter; Harald Burgsteiner; Wolfgang Maass (2008). "A learning rule for very simple universal...

21 KB (2,239 words) - 18:53, 30 December 2024